Prototyping is important for both UX research AND UX design. In this post I talk about the importance of verisimilitude in both aspects of UX work. I often have conversations with other UX designers who have different opinions from me about the necessity of verisimilitude or the ability of design tools to create it, so I wanted to explain my opinion in detail here.

This essay assumes some familiarity with UX work. If you have any questions or comments, please let me know!

Terminology

Artboard-linking tools

Any type of technology that lets you define "artboards" as groupings of elements and, using predefined hotspots, lets the user click to navigate between them. I am grouping these together because, in spite of some minor differences, they all fall down for the same reasons. As far as I know, this includes:

- Sketch

- InVision

- Figma

- UXPin

- Adobe XD

- Paper prototyping

- A bunch more I have tried over the years

If I'm wrong about any of these, please let me know and I'll update this post.
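To make the category concrete, here is a minimal TypeScript sketch of what an artboard-linking prototype boils down to, assuming (for illustration only) that it can be modeled as a fixed table of hotspot links. The type names, function names, and the checkout flow are hypothetical and don't correspond to any tool's actual file format or API.

```typescript
// Hypothetical model: an artboard-linking prototype is a static table
// mapping (current artboard, clicked hotspot) to the next artboard.
type ArtboardId = string;
type HotspotId = string;
type LinkTable = Record<ArtboardId, Record<HotspotId, ArtboardId>>;

const checkoutFlow: LinkTable = {
  productPage: { buyButton: "cartPage" },
  cartPage: { checkoutButton: "paymentPage", backLink: "productPage" },
  paymentPage: { payButton: "confirmationPage" },
  confirmationPage: {},
};

// The only state is the current artboard. The next screen depends solely
// on which hotspot was clicked, never on anything the user typed or chose.
function click(table: LinkTable, current: ArtboardId, hotspot: HotspotId): ArtboardId {
  const next = table[current]?.[hotspot];
  // Clicking outside a defined hotspot leaves the user on the same artboard.
  return next ?? current;
}

// Replay a sequence of clicks and return the artboard the user ends on.
function replay(table: LinkTable, start: ArtboardId, clicks: HotspotId[]): ArtboardId {
  return clicks.reduce((screen, hotspot) => click(table, screen, hotspot), start);
}
```

In this model, the same click on the same hotspot always lands on the same artboard, no matter what the user did before, which is the limitation the rest of this post keeps running into.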

True interactive prototyping

I'm using this term to describe technology that allows the inclusion of conditional logic and the ability to manipulate data sets within the browser. As of right now, the only options for this are:

- Axure

- Becoming a front-end web developer

I don't want to give the impression that I'm shilling for Axure or that I think it's a perfect product. If another product came on the market tomorrow that does what Axure does without the weird bugs or the learning curve, I'd be all over it. But for now it's the only tool that I have come across in the 10 years I've been doing this that provides this functionality.

Verisimilitude

This is a word that I first learned in the context of film studies, where it's used to describe how real, intuitive, and recognizable something feels to the viewer. In philosophy, it's also used to describe how 2 statements can both be false, but one can be less false than the other.

I think both meanings have value in talking about UX because designing experiences that feel real, intuitive, and recognizable to users is extremely important.

In the context of UX research & design, because we're talking about simulations with varying degrees of realistic aspects, it's also important to recognize that there can be more than 1 way to simulate something, and one simulation can be closer to the truth than the other. The closer we stay to the truth, the more reliable the information we gather. Therefore it is actually possible for one type of prototype to be better than another even if they are both ultimately prototypes.

Different projects have different goals and requirements

UX projects for interactive systems are different from UX projects for informational brochure websites, whose primary purpose is to present some information and perhaps let visitors fill in a form or click a button. Unfortunately we just have the one term to describe both of them, and one dominant paradigm in available tools.

Imagine 2 designers.

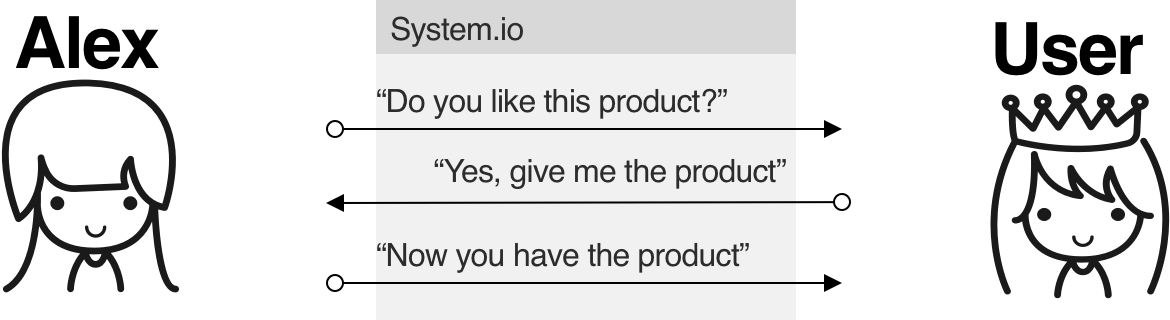

Alex works in the marketing department of a software company. They work on ensuring that the content of the website helps visitors understand the value of the company's software and makes it easy to purchase and download. This is a very important job because users need a way to be informed of products and services that can benefit them, and websites are a great medium for presenting information.

Pat works on the UX team of the software company. Their job is to make sure that once the software has been downloaded, the user is able to complete their tasks and enjoys completing them, so that they will send new users to the website designed by Alex.

What Alex and Pat have in common is that they both create deliverables at progressive levels of fidelity that represent plans for which elements should be on each screen, what they should say, where they should go, what they should look like, and what they should do.

The difference between their work assignments is that the systems that Pat designs have more moving parts.

Here is what Alex is working towards. This kind of input and output is very easy to test with Sketch, InVision, or many other similar tools that basically do the same thing: allow you to define the look & feel of a web page and link pages together.

This is a story with a beginning, a middle, and an end, all of which are easily simulated.

To test the effectiveness of this design, Alex can simply put their deliverables in front of target users and see how well they perform. Artboard-linking tools simplify this process for Alex by removing the need for developers to link the pages together. That's great. The only thing that needs to be simulated is the exchange of money for goods and services, which is so familiar to most of us by now that test users will easily understand how to imagine this step in the process and still be able to evaluate the effectiveness of the design, even if they have never seen the design before. The only new thing being introduced to the test user is the content and the way it is presented, which is completely within Alex's control.

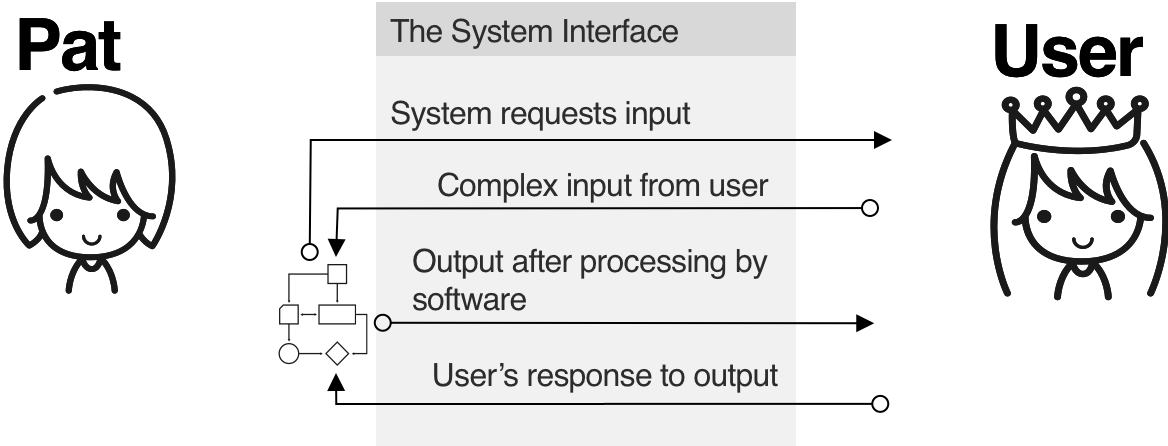

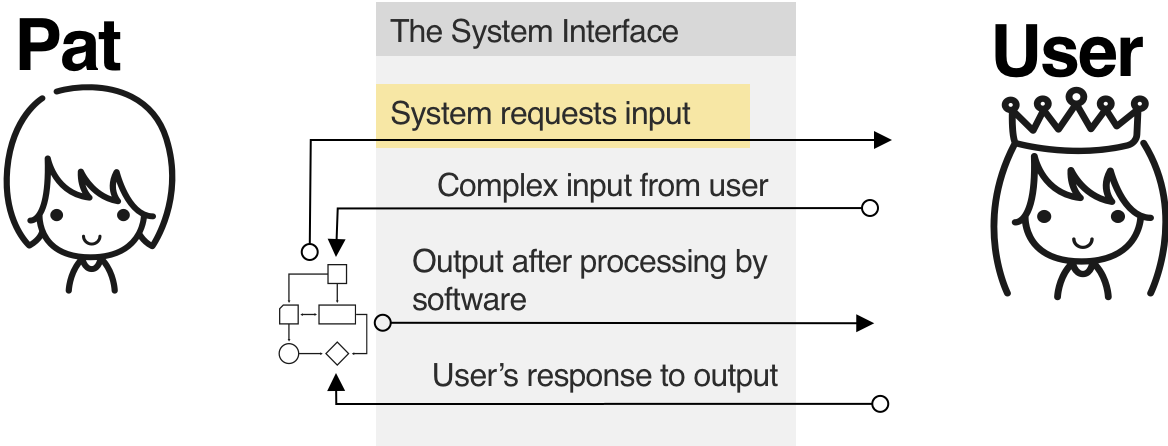

Here is what Pat is working towards. As you can see, there are many more moving parts, and I don't mean animations.

This is a loop that can continue indefinitely, with several aspects that require simulation. The important thing to remember, especially for non-UX team members, is that the system will not work without the correct input from the user, no matter how good a job you did coding the engine. The point of all this user-centered design stuff is to have someone on the project team who specializes in making sure that the human-shaped half of the system is taking in, processing, and outputting information correctly.

Differences between UX prototypes and engineering prototypes

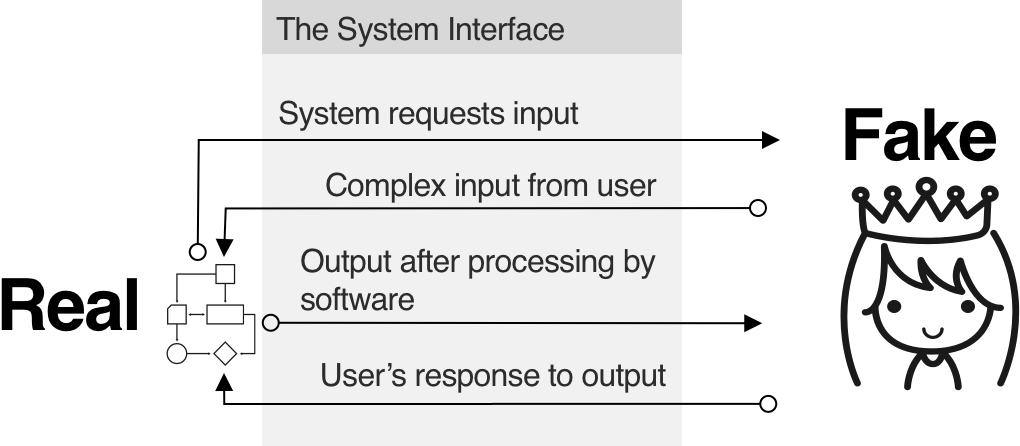

In an engineering prototype, the system is really providing the output, while the user of the system is not intended to be real yet. So we are faking the presence of the user by providing the kind of responses we are planning to collect.

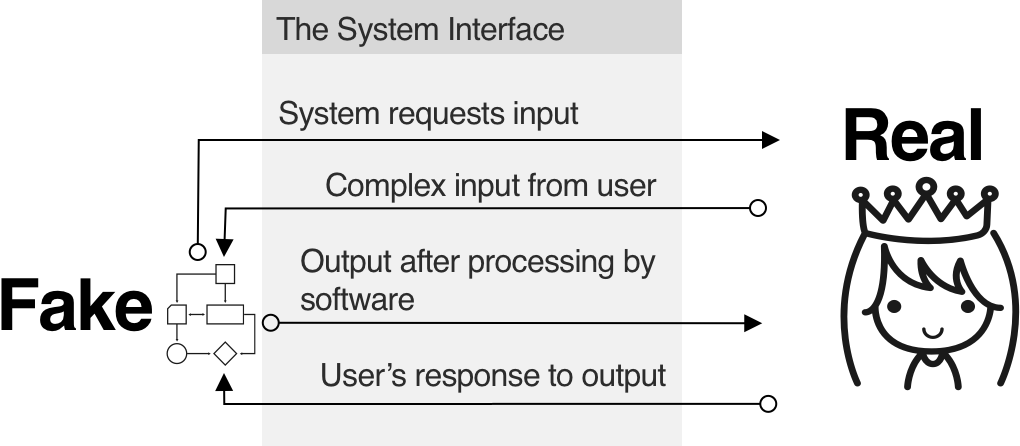

In a UX prototype, the system is not intended to be real, but the user is. We're knowingly faking the system by providing the responses we are planning to provide, but the user should be a real user, or as close to that as we can get.

A well-designed system should have both sides of this equation tested, iterated, tested, and iterated again before launch to ensure everything works. In both of these cases, the “fake” partner in each prototype must be as close to real as possible, or we won’t be able to make accurate predictions about the real system.

Sometimes stakeholders outside of UX may see an interactive UX prototype and assume it's an engineering prototype. This can be remedied by explaining the differences between these two types of prototypes to the stakeholder.

Elements of an interactive system and how they get translated into UX prototypes

In this section I will go into more detail about the illustration of Pat's interactive software shown above, and why achieving verisimilitude using artboard-linking tools is more challenging than it needs to be.

1. The system's request for input

All software needs something from the user before it can start doing its thing, and making this clear to first-time users is one of the hardest parts of UX and the most critical to get right.

With artboard-linking tools, Pat can usually do a pretty good job of simulating this as long as only one thing is changing on the screen at a time. If 2 things need to change on the screen and the order of the changes isn't predictable, this case cannot be simulated in artboard-linking tools.

Pat can create a version that forces the test user to make the changes in a particular order, but what it looks like when the changes are made in a different order isn't being truly discovered or tested, even though it can have a significant effect. Pat can try to guess at what those states might look like and create artboards for the developer spec. But this is Pat's educated guess about what that will look like, and guesses are great, aren't they? We love guesses. It's very inexpensive to build, market, and launch a whole system that depends on people using it based on a guess about whether or not people can use it.
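To see how quickly those guesses pile up, here is a small TypeScript sketch that counts what Pat would need to cover. It rests on a simplifying assumption of my own: each element on the screen has only two states (unchanged and changed), and the user can change them in any order. Real screens usually have elements with more than two states, so treat these numbers as a lower bound.

```typescript
// Simplifying assumption: each of n on-screen elements is either
// "unchanged" or "changed", and the user can change them in any order.

// Every combination of changed elements is a distinct screen state, so a
// static prototype needs one artboard per combination: 2^n artboards.
function artboardsNeeded(n: number): number {
  return 2 ** n;
}

// Each order in which the user can make all n changes is a distinct path
// through those artboards: n! paths, any of which a test user might take.
function possibleOrders(n: number): number {
  let result = 1;
  for (let i = 2; i <= n; i++) {
    result *= i;
  }
  return result;
}

for (const n of [1, 2, 3, 5]) {
  console.log(`${n} changes: ${artboardsNeeded(n)} artboards, ${possibleOrders(n)} orders`);
}
```

With just 5 independent changes, that's 32 artboards and 120 possible orders, and forcing a single order means testing only one of those paths.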

Using true interactive prototyping, Pat can set up all the things that change on the screen and try them out in different orders to see what happens and fully understand and evaluate the experience. Later on, we can observe the order in which test users make the changes instead of dictating it to them, and from this we get information about how to tailor the design to real users.

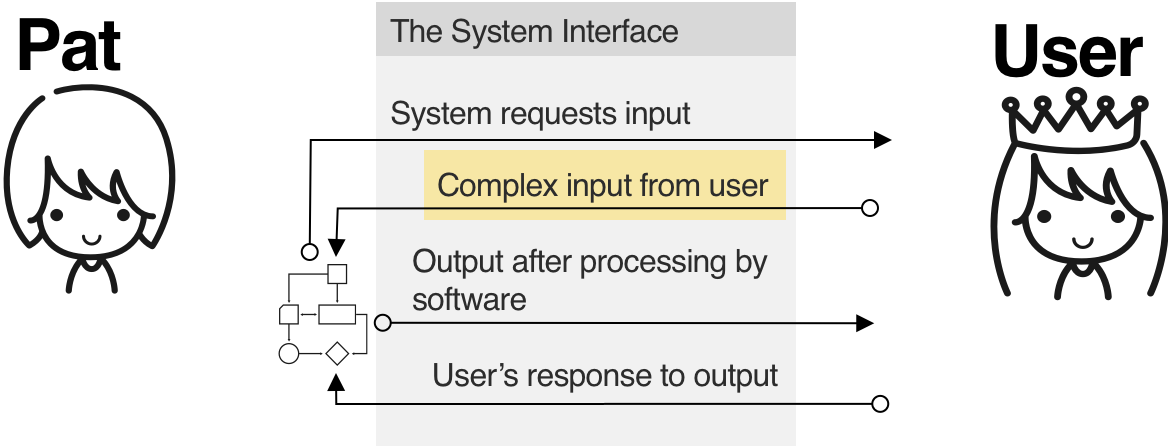

2. The input provided by the user

This can take the form of keyboard or voice input, interactive multi-state form controls, selecting objects that are already in the system, creating a schematic on a canvas, uploading files, or anything else.

It's usually not as simple as clicking on something that can be represented by a predefined hotspot, so Pat will have to force it into the shape of something that can be represented by a predefined hotspot. Any time you change one thing into another, a little something gets lost, and you need all kinds of sensitive scientific equipment to figure out what and how much. Again, Pat has to just make do with an unrealistic simulation and hope it's good enough without ever being sure.

Instead of being able to test the experience of typing into the form field, for example, the test user is clicking on the form field, into which some predefined output appears. Without having actually done the typing and then found the submit button, or navigated the list of files, or dragged and dropped, the test user is giving us a guess about how they think they would feel if they were really using this thing they have never seen before and might barely understand.

Using true interactive prototyping, while it's still a simulation, Pat doesn't have to manipulate something into the shape of something it's not. A keyboard input can just be a keyboard input. This way the test user can see their actual input come up on the screen and let us know how that felt for them.

Which prompt do you think is more likely to elicit a response from the test user that accurately conveys their feelings:

- "Tell us how you felt about the thing that happened a second ago"

- "You just saw some text you didn't know was going to appear after you clicked on a picture of a form field. Think back to all the other software you've ever used and compare this indirect simulation of something you've never seen before, then tell us how you think this might make you feel in the future under different conditions if you knew what the outcome would be."

Pat is asking this user to employ more memory and imagination than Dungeons & Dragons, and we're only halfway through one task.

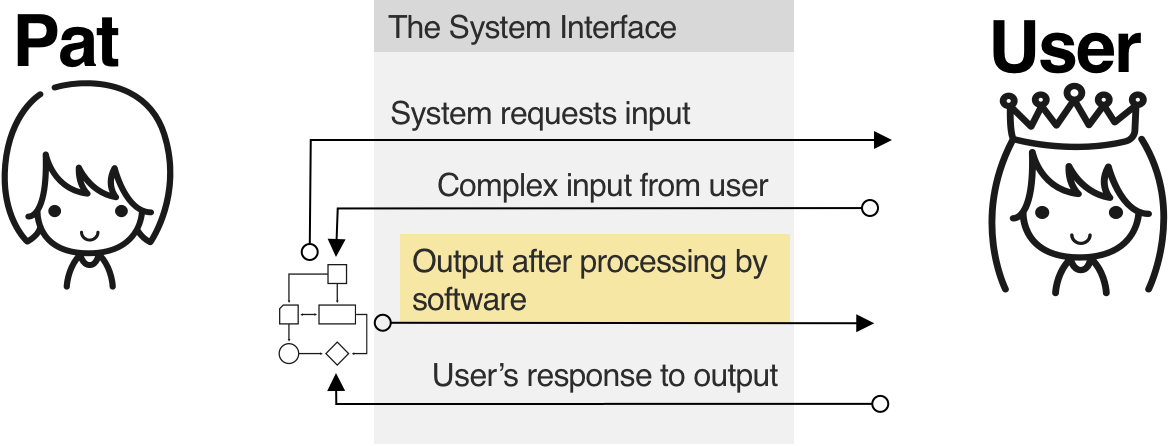

3. Output after processing by software

The purpose of software is to take some information and do something to it that's either too time-consuming or difficult to do in our own heads. So getting the output from the software is the entire point.

Here are some undeniable facts about software:

- A program's output changes based on different inputs. You can just use a typewriter and a photocopier if you don’t need things to change based on inputs.

- A program's output is usually pretty complicated. Complicated means consisting of different and connected parts in an intricate pattern. Even when we were using typewriters, we were still doing all kinds of very complicated things. Most things people do are complicated in one way or another.

Artboard-linking tools have no capability to stand in for anything other than a simple HTML web page. Pat has to come up with a lot of fancy tricks in setting up their artboards to mimic the changing AND complicated output of their system, to make it feel something like the finished product will probably, hopefully feel to use. If successful, Pat is making yet another guess, and getting back more guesses in return. And if unsuccessful, Pat has to hope some developer has time to build something. In my experience, there are many things that software does that can't be simulated to any degree just by linking static artboards together.

As a true interactive prototyping tool, Axure lets Pat create a realistically complicated data set that can be changed by both the user and the prototype itself based on real (or real enough) input.
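As a rough illustration of the idea, here is a minimal TypeScript sketch of a prototype whose output is computed from a data set and from what the user actually types, rather than picked from predefined artboards. It is not meant to reflect how Axure implements this; the file-list data, the field names, and the functions are all hypothetical.

```typescript
// Hypothetical data set for a file-management prototype.
interface FileRecord {
  name: string;
  sizeKb: number;
}

// Prototype state: the data set plus the user's current search text.
interface PrototypeState {
  files: FileRecord[];
  query: string;
}

// Typing updates the query with exactly what the user entered.
function typeQuery(state: PrototypeState, text: string): PrototypeState {
  return { ...state, query: text };
}

// Uploading adds a record to the data set.
function uploadFile(state: PrototypeState, file: FileRecord): PrototypeState {
  return { ...state, files: [...state.files, file] };
}

// The output is derived from the current data and the real input.
function render(state: PrototypeState): string {
  const q = state.query.toLowerCase();
  const matches = state.files.filter((f) => f.name.toLowerCase().includes(q));
  if (matches.length === 0) {
    return `No files match "${state.query}"`;
  }
  const totalKb = matches.reduce((sum, f) => sum + f.sizeKb, 0);
  return `${matches.length} file(s), ${totalKb} KB: ${matches.map((f) => f.name).join(", ")}`;
}

let state: PrototypeState = {
  files: [
    { name: "budget.xlsx", sizeKb: 120 },
    { name: "Budget notes.txt", sizeKb: 4 },
    { name: "logo.png", sizeKb: 56 },
  ],
  query: "",
};

state = typeQuery(state, "budget");
console.log(render(state)); // 2 file(s), 124 KB: budget.xlsx, Budget notes.txt
state = uploadFile(state, { name: "budget-2025.pdf", sizeKb: 300 });
console.log(render(state)); // 3 file(s), 424 KB: budget.xlsx, Budget notes.txt, budget-2025.pdf
state = typeQuery(state, "invoice");
console.log(render(state)); // No files match "invoice"
```

The point of the sketch is that every screen the test user sees has a concrete relationship to what they did, including combinations nobody drew an artboard for in advance.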

In order to make this happen, Pat has to have some understanding of what is actually happening to the data, and this must be verified with the engineering team. This helps Pat create a deeper understanding of the user's requirements in order to find the best way to present the output, as well as strengthening cross-team relationships.

Of course, even with Axure, some things still can't reasonably be simulated by a UX designer alone; I have come up against these limits several times, according to Axure's support team. But the probability of that is lower because the number of such cases is smaller.

The end result is the test user seeing output that has a concrete relationship to their input, which makes it easier for them to compare this experience to other experiences in order to let us know how they feel about this one.

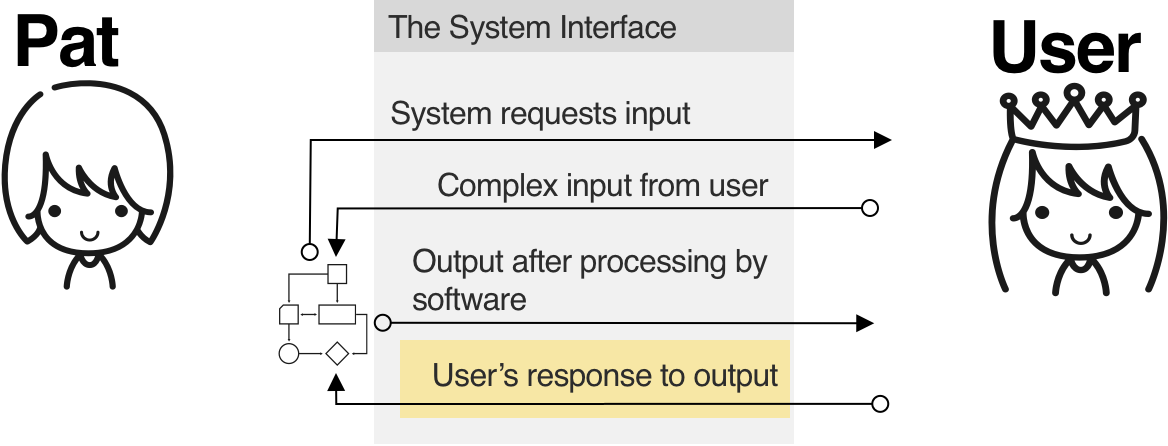

4. User's response to output

In the previous step we were only talking about representing the output of the system. Now we have to plan and test how the user will respond to that output.

There are usually 2 ways this can happen:

- The user has just completed one part of a task and needs to keep going. The user must respond to the output now.

- The task is complete and the output from the previous step is stored. The user might have some follow up tasks to complete for this item later, but for now they can start from the beginning or go do something else.

A real user will be immersed in the context of both performing the input and understanding and acting on the output. Over time they will develop a fluency in knowing what kind of input results in what kind of output, even if they don’t understand why. A test user has no such context, so that's another thing Pat has to simulate.

Using the simulated output that was purported to be the result of the simulated input, Pat must now create some artboards that collect the user's simulated input again, from a test user who has only been clicking pre-defined hotspots this whole time, rather than the whole host of mental activities a real user would have experienced. Doesn't that sound like, perhaps, two very different experiences that can't really be compared?

At least if the test user is seeing the result of their own input, they are better suited to compare that to the output and tell us if that was what they expected and why. Even if they have never seen this system before, they should have some experience putting information in and getting it out that they can compare this to.

In conclusion, verisimilitude in UX research and design is a land of contrasts

Manually designing and linking artboards for each of the 4 states described above, with multiple variants to represent alternate conditions, over several iterations during the design process, at multiple stages of fidelity, and for a potentially huge number of individual tasks and screens, produces poor verisimilitude. On top of that, the process itself is inefficient, time-consuming, error-prone, and unscientific.

I understand why design organizations want to limit the proliferation of expensive licenses, accounts, trainings, and share-link formats. That is at least a more honest reason than pretending something is the right tool for every job when it clearly isn’t.

As a UX designer, I would be troubled to ask Pat to use the same software as Alex when they have wildly different needs. And yet we all do it every day, even as we write personas to save other folks from dealing with software that isn't suited to their needs.